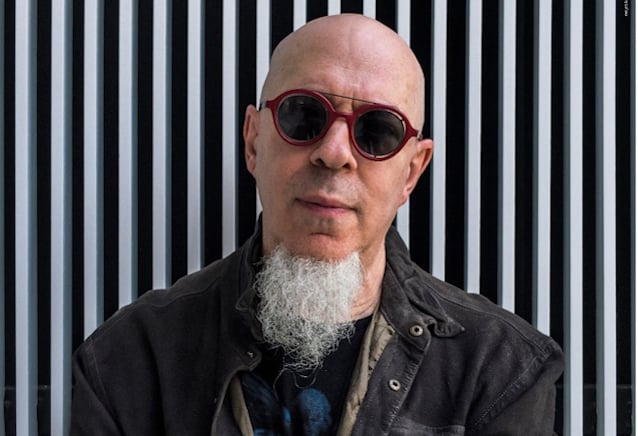

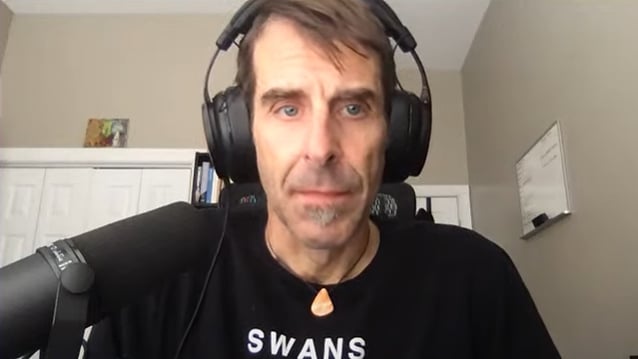

DREAM THEATER keyboardist Jordan Rudess has once again weighed in on a debate about people using artificial intelligence (A.I.) to create music. Asked by “#UpgradeMe With Dana Leong” to clarify his feelings about this new technology, Jordan said (as transcribed by BLABBERMOUTH.NET): “There’s a lot of confusion, there’s a lot of fear about A.I., there’s a lot of excitement about it. It’s all over the map, and there’s a lot of energy being put behind it. So it’s a tricky world that we live in right now. I don’t think I’ve ever been so into something that has been so, like, debatable. I could mention to somebody, ‘Oh, I’m working in A.I. music making.’ ‘Oh, that’s the devil. You shouldn’t be doing that.’ And, ‘I’m gonna ban Jordan Rudess from my life, because he’s working on destroying the world.’ So there’s that to contend with.”

“The area that I’m working on, even though I’m working in a facility like MIT that’s completely positive and everybody doing work there in A.I. is very inspired and just doing magical things, there’s a reality to the world and the way that a lot of people are viewing A.I. So that should be said first,” he explained. “It’s not with a blind eye that I go into this space, because I think about it all the time.

“My whole life has been spent following my creative journey, following my inspiration, practicing my instruments and my art form and trying to master my craft. The things I’m interested in are things like entertaining, entertainment, I’m interested in education, I’m interested in raising the level of inspiration for myself and others around me as much as I can. And I’m interested in any tools that allow me to do that. So I feel like with A.I., my focus there is on those elements. I wanna use A.I. and figure out how can I help somebody with the next level of ear training. What can we do?
People are so interested to learn ear training to get better at playing their instruments and learn where the notes are on a piano or trumpet, a violin, a cello, a voice or whatever. How can we do that with A.I.? There’s a lot of really cool ways we can do that.”

Rudess added: “There’s a lot of interesting ways to think about A.I., not only as a generator of music, riffs, sound, but also as a technology that can be a partner, be a collaborator as well. And even a technology that can predict what you’re gonna do. So in my mind, in my experience, this technology opens up the next level of possibility for all the cool, all the fun things that I wanna do with my music. I can have a next-generation jam partner that is sensitive to what I’m playing and offers me interesting ideas. I can have an educational tool that I’m kind of helping to build. For me, it’s about the smiles and the inspiration and the positivity and the sound and the journey and learning. And again, just for anybody out there that is thinking that I’m connected with the devil, I am not saying any of this with a blindness to composers’ rights and all the things that musicians are worried about that make sense to worry about, ’cause they’re real. But as a single human being in the space, I think somebody… Let me put it this way: everybody has to decide where are you with technology? What are you doing? Are you working towards something that’s magical, cool, educational, entertaining, great and inspiring, or are you using it in a way that is mundane, hurtful, something that will just produce content for the sake of it? It’s a choice.”

“So those are my feelings about it,” Rudess concluded. “I don’t think that A.I. is gonna destroy the world any more than all the technology we had before it will. I mean, in the wrong hands all technology can be dangerous… the next level of power, certainly.
But anyway, my focus is doing what I think are cool things with it… More colors, more sounds, more visuals, more cool.”

During the same chat, Rudess was asked to reflect on the “strangest gig” he has ever played. He responded: “Oh, that’s a funny, that’s an interesting thing. I’ve had so many different kinds of gigs and I’ve had weird things happen. I could point out something very unusual that happened at one of my gigs. It might be fun for our listeners, which is that I was in Mexico City doing a DREAM THEATER concert. And I have this keyboard stand that actually goes around, kind of like a 360[-degree] thing, and it tilts and moves in all kinds of interesting ways. So about 20 minutes into the show, we were at the National Auditorium there in Mexico City, I went into my lead section and I tilted my keyboard like this, so I’m kind of bending over and the keys are facing the audience. And I did my lead and everybody was, like, ‘Oh, that’s cool.’ Then I went to hit the button that brings the keyboard back to flat and it didn’t come back. So my tech came out and he’s got a big wrench and he’s trying to get the keyboard to come back and he’s working as hard as he can, but he could not get that thing. It was locked in this position.

“And I played the whole rest of the show, bent over, all the different parts. Playing lead that way is one thing, but trying to play piano parts, that was another [thing]. And I was just sitting there playing the whole show like that in front of, like, 10,000 people, and every time a part would come up, I’d go, ‘God, can I play this part in this position or not?’ And I got through the whole show, and it was so weird. And then I was bent over and I was having trouble getting my body to come back to normal. I needed a chiropractic adjustment after that. It was very odd. It was definitely one of the stranger shows.”

Rudess is collaborating with the MIT Media Lab’s Responsive Environments research group.
Rudess’s main partners in the enterprise are Media Lab graduate students Lancelot Blanchard, who explores musical applications of generative A.I. (informed by his own studies in classical piano), and Perry Naseck, an artist and engineer specializing in interactive, kinetic, light- and time-based media. Overseeing the project is Joseph Paradiso, the Alexander W. Dreyfoos (1954) Professor at the Media Lab and head of the Responsive Environments group, whose team has a tradition of investigating musical frontiers through novel user interfaces, sensor networks and unconventional data sets.

Rudess is a keyboardist known for his work with the platinum-selling, Grammy-winning progressive metal band DREAM THEATER, which embarks this fall on a 40th-anniversary tour. He is also a solo artist whose latest album, “Permission To Fly”, was released on September 6; an educator who shares his skills through detailed online tutorials; and the founder of software company Wizdom Music. His work combines a rigorous classical foundation (he began his piano studies at Juilliard at age 9) with a genius for improvisation and an appetite for experimentation.

Last September, Rudess was asked by Italy’s Metal.it whether he had any fears about A.I. Jordan said: “One of the companies that I work with very closely is called Moises. It’s a very interesting company. They’re mostly known for their work in track separation, and musicians all around the world use it. Because basically the way that it works is that you can upload to their system a stereo audio file or even a video, and then when it’s within their system, the system can take it apart. So let’s say you upload a DREAM THEATER song, and then when it comes back to you, you can decide: I wanna have the vocals, the piano, the bass, the drums, the lead guitar, the acoustic guitar. You can separate it. It’s like having access to all the individual tracks. It’s kind of a modern-day musical miracle, really, the way that it works.
And there’s some different companies that work in track separation, but Moises is pretty much the top of the game. It’s incredible. But not only that. So after they kind of put their track separation out into the world, then they started to develop the system even further, to the point where now it does things like… well, first of all, pitch and time are completely flexible. You can have things playing back at different pitches, but maintain the tempo of the music. But it also has gotten really good at showing you the chords exactly on the beat where they fall in the music, and it’s getting very accurate as well, which is really great. Plus the idea or the implementation of being able to separate the sections of the music so somebody who’s learning a piece can say, ‘I just wanna hear the verse,’ and loop the verse, maybe the verse into the bridge and connect those two and loop them seamlessly.”

He continued: “The reason I bring them up is because I love the technology but also because all the musicians around the world that use this, some of them have this idea that A.I. is a bad thing, but they don’t even know that Moises is built on A.I. This is the technology that is the framework for everything that Moises does. I’m sorry to be the bringer of bad news to somebody who’s against A.I. but [is] now learning a piece of music using it.”

Addressing the original question, Rudess added: “First of all, I believe more and more that artificial intelligence is a very bad name for this technology. There’s a lot of other ways to think about it. There’s nothing really artificial about it. We should go back in time, really, and change that.
It’s leading people down the wrong thought process to think about what’s actually going on with the tool and how these things are put together.”

He continued: “All that said, there’s a lot of concern in the music business from people about their rights, how these models have been instructed or loaded in with different songs and styles, and rightly so. People, musicians are concerned. They feel like this is not maybe fair. Maybe their music is being loaded into a computer without their permission. All these kinds of things are really, really important to figure out. This is all legal matters which need to be resolved in a big way. It’s gonna take time, and honestly, even though I am aware, sympathetic and also concerned, this is not my specialty, that kind of thing. My specialty is in thinking, okay, well, you have this new technology. How can we use it to make more expressive instruments? How can we use it to have more musical fun? How can we engage more people into the beauty of making music themselves? And how can I use it to just kind of like extend what I do in the musical world and do things that have never been possible before, and also be one of the people who kind of helps to steer it in directions that are most valuable to the future of music and music technology. So that’s really important to me. That’s one of the reasons I’m really excited about the work that I’m doing with MIT [Center for Art, Science & Technology], because they have some beautiful minds there that are thinking in positive ways about how you can take this technology, the technology that’s available to us today, and do amazing, beneficial, cool, important, educational, entertaining things with it.”


January 12, 2025

DREAM THEATER’s JORDAN RUDESS: ‘I Don’t Think A.I. Is Gonna Destroy The World Any More Than All The Technology We Had Before It Will’